Multirate Training of Neural Networks

In this work we propose multirate training of neural networks.

We show that deep learning applications (e.g., WideResNet-16 trained on patch-augmented CIFAR-10 data) exhibit multiple latent time scales, and we propose a multirate method that learns the different features present in the data by training the network on different time scales simultaneously.

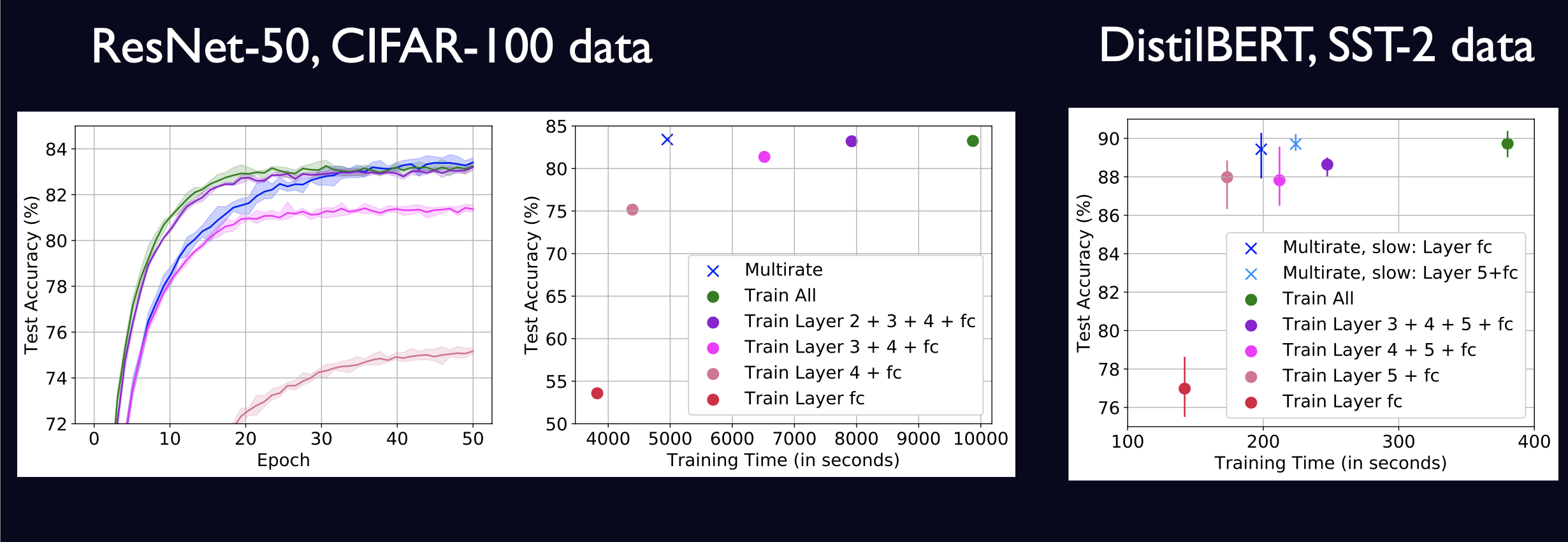

Further, we use a multirate method, which partitions the network into “fast” and “slow” parts and uses linear drift, to fine-tune deep neural networks for transfer learning applications in almost half the time without reducing the generalization performance of the resulting model.
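To make the fast/slow partition concrete, here is a minimal sketch of a multirate gradient step on a toy two-parameter problem. It is an illustration under stated assumptions, not the implementation from the paper. It assumes that the fast part receives a fresh gradient at every step, that the slow part's gradient is refreshed only every `k` steps, and that "linear drift" means the slow parameters keep moving along their most recent direction in between. The function name `multirate_sgd`, the toy loss, and all parameter values are hypothetical.

```python
def multirate_sgd(grad_fast, grad_slow, theta_fast, theta_slow,
                  h=0.5, k=5, mu=0.0, steps=100):
    """Toy multirate SGD with momentum on two scalar parameters.

    Assumed scheme (illustrative only):
      - fast parameter: new gradient every step, step size h / k
      - slow parameter: gradient refreshed every k steps, but the
        parameter still moves by h / k each step along the last
        computed direction (linear drift)
    """
    p_fast, p_slow = 0.0, 0.0
    slow_grad_evals = 0
    for i in range(steps):
        # Fast part: evaluate its gradient at every step.
        p_fast = mu * p_fast + grad_fast(theta_fast, theta_slow)
        theta_fast -= (h / k) * p_fast

        # Slow part: evaluate its gradient only every k-th step.
        if i % k == 0:
            p_slow = mu * p_slow + grad_slow(theta_fast, theta_slow)
            slow_grad_evals += 1

        # Linear drift: reuse the last slow direction between refreshes.
        theta_slow -= (h / k) * p_slow
    return theta_fast, theta_slow, slow_grad_evals


# Hypothetical toy loss: L = 0.5 * (4 * (tf - 1)**2 + (ts + 2)**2),
# minimised at tf = 1, ts = -2.
def grad_fast(tf, ts):
    return 4.0 * (tf - 1.0)


def grad_slow(tf, ts):
    return ts + 2.0


tf, ts, evals = multirate_sgd(grad_fast, grad_slow, 0.0, 0.0)
print(tf, ts, evals)
```

In this sketch the slow gradient is evaluated `steps / k` times rather than `steps` times. If the slow part holds most of the network, as one might arrange when fine-tuning a pretrained model, skipping those evaluations is where a saving in compute could come from.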

This paper is joint work with Ben Leimkuhler and has been accepted to ICML 2022.

The latest version can be found on arXiv: [paper] [errata] [slides] [poster]